We recently covered an article on Using AI for Market Abuse Surveillance, which describes a very interesting use of Machine Learning (ML) to improve the classification of alarms generated by market surveillance.

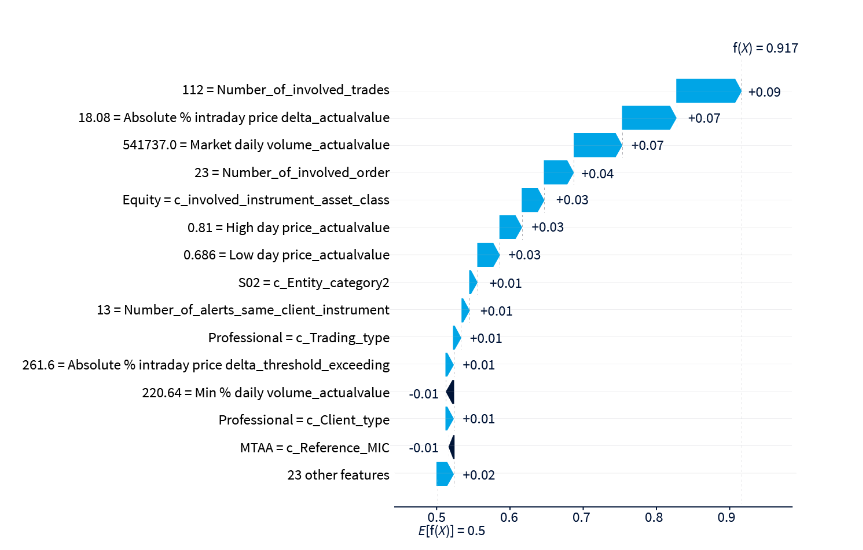

One of the issues with ML is its lack of transparency, due to the “black-box” nature of the models: you get an answer, but no explanation of the reasoning that led to it.

So it is with great interest that I read a follow-up to that article on the ION Markets Blog, titled Improving transparency in machine learning models for market abuse detection. It describes a technique that uses SHapley Additive exPlanations (SHAP) values, which are derived from game theory, to unravel the complexities of ML models.
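To make the game-theory idea concrete, here is a minimal Python sketch of exact Shapley values for a single alert. Each feature is treated as a "player", and its Shapley value is its weighted average marginal contribution to the model's score across all coalitions of the other features. The scoring function `alert_score`, its feature names, and all numbers are hypothetical. As a simplifying assumption, a feature that is "absent" from a coalition is set to a baseline value; SHAP tools such as the `shap` library instead average over a background dataset, and this is not necessarily the variant used in the article.

```python
from itertools import combinations
from math import factorial


def shapley_values(model, x, baseline):
    """Exact Shapley values for a single input x.

    A feature "absent" from a coalition is set to its baseline value
    (a simplifying assumption; SHAP tools average over background data).
    """
    n = len(x)

    def value(coalition):
        # Model output when only the features in `coalition` take their real values
        z = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return model(z)

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):  # coalition sizes 0 .. n-1
            for subset in combinations(others, size):
                s = set(subset)
                # Shapley weight |S|! (n - |S| - 1)! / n!
                weight = factorial(size) * factorial(n - size - 1) / factorial(n)
                # Marginal contribution of feature i when it joins coalition S
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi


def alert_score(z):
    # Hypothetical alert-scoring model with one interaction term
    cancel_ratio, order_size, price_impact = z
    return 2.0 * cancel_ratio + 0.5 * order_size + 3.0 * cancel_ratio * price_impact


x = [0.9, 2.0, 0.4]         # the alert being explained (made-up values)
baseline = [0.1, 1.0, 0.0]  # "typical" feature values (made-up)
phi = shapley_values(alert_score, x, baseline)
print([round(p, 2) for p in phi])  # [2.08, 0.5, 0.6]
print(round(sum(phi), 2), round(alert_score(x) - alert_score(baseline), 2))  # 3.18 3.18
```

The two printed sums match: the Shapley values always add up to the difference between the model's output for the alert and its output at the baseline. Note how the interaction term's credit is split between `cancel_ratio` and `price_impact`. Because the loops visit every coalition, the cost grows exponentially with the number of features, which is why practical SHAP implementations such as KernelSHAP and TreeSHAP rely on sampling or model-specific shortcuts.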

The picture below, from the article, provides a nice schematic of SHAP values and a waterfall plot, bringing much-needed transparency to the answer/classification.
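A waterfall plot starts at the model's base value (its average output, E[f(X)]) and adds each feature's SHAP value in turn until it reaches the prediction for the alert being explained. This works because SHAP values are additive. The minimal sketch below prints a text version for a hypothetical linear alert model where, assuming independent features, each SHAP value reduces to w_i (x_i - mean_i). The weights, means, and feature names are made up for illustration; in practice the `shap` library draws the graphical version, for example with `shap.plots.waterfall`.

```python
def linear_shap(weights, bias, x, feature_means):
    """SHAP values for a linear model f(x) = bias + sum(w_i * x_i),
    assuming independent features: phi_i = w_i * (x_i - mean_i)."""
    base_value = bias + sum(w * m for w, m in zip(weights, feature_means))
    phi = [w * (xi - m) for w, xi, m in zip(weights, x, feature_means)]
    return base_value, phi


def text_waterfall(names, base_value, phi):
    """Build a text waterfall: start at the base value, add contributions
    in order of decreasing magnitude, and finish at the model output."""
    running = base_value
    lines = [f"{'E[f(X)]':<15}{base_value:>8.2f}"]
    for name, contrib in sorted(zip(names, phi), key=lambda p: abs(p[1]), reverse=True):
        running += contrib
        lines.append(f"{name:<15}{contrib:>+8.2f}  -> {running:.2f}")
    lines.append(f"{'f(x)':<15}{running:>8.2f}")
    return running, lines


# Hypothetical alert model and feature values, for illustration only
names = ["cancel_ratio", "trader_tenure", "price_impact"]
weights = [1.5, -0.8, 2.0]
bias = -1.0
means = [1.0, 1.0, 0.2]
x = [3.0, 2.0, 1.2]

base_value, phi = linear_shap(weights, bias, x, means)
final, lines = text_waterfall(names, base_value, phi)
print("\n".join(lines))
```

The last row equals the model's own output for this alert (here bias + sum(w_i x_i) = 4.30), so every step between the base value and the prediction is attributed to exactly one feature. The negative `trader_tenure` contribution shows how a feature can pull the score back down.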

Please read the article at Improving transparency in machine learning models for market abuse detection.